Trigger data collection API

Learn how to trigger data collection using the Web Scraper API with options for discovery and PDP scrapers. Customize requests, set delivery options, and retrieve data efficiently.

How It Works

By default, scraping requests are processed asynchronously. When you submit a request, the system starts processing the job in the background and immediately returns a snapshot ID. Once the scraping task is complete, you can use the snapshot ID to download the results via the API at your convenience. Alternatively, you can configure the request to deliver the results automatically to an external storage destination, such as an S3 bucket or Azure Blob Storage. This approach is well suited to larger jobs and to integration with automated data pipelines.

Body

The inputs to be used by the scraper. They can be provided either as JSON or as a CSV file:

JSON: an array of input objects. Example: [{"url":"https://www.airbnb.com/rooms/50122531"}]

CSV: a CSV file, in a field called data. Example (curl): data=@path/to/your/file.csv
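When sending JSON, the body is simply the list of input objects. The sketch below (standard library only) shows one way to produce it; `build_json_body` is a hypothetical helper, and the Content-Type you would pair it with (presumably `application/json`) is an assumption rather than something this page states. The CSV alternative is uploaded in the `data` field instead, as in the curl fragment above.

```python
import json


def build_json_body(inputs: list) -> bytes:
    """Return the UTF-8 JSON body for a trigger request (hypothetical helper)."""
    # Every input must be an object; the body is an array of such objects.
    if not isinstance(inputs, list) or not all(isinstance(item, dict) for item in inputs):
        raise TypeError("inputs must be a list of objects (dicts)")
    # Compact separators reproduce the example body above byte for byte.
    return json.dumps(inputs, separators=(",", ":")).encode("utf-8")


body = build_json_body([{"url": "https://www.airbnb.com/rooms/50122531"}])
print(body.decode("utf-8"))  # [{"url":"https://www.airbnb.com/rooms/50122531"}]
```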

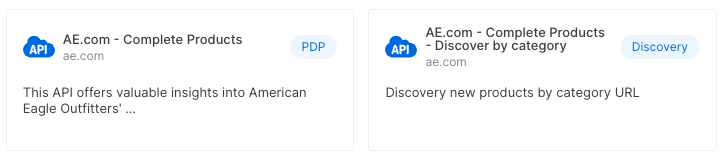

Web Scraper Types

Each scraper can require different inputs. There are two main types of scrapers:

1. PDP

These scrapers require URLs as inputs. A PDP scraper extracts detailed product information, such as specifications, pricing, and features, from web pages.

2. Discovery

Discovery scrapers allow you to explore and find new entities/products through search, categories, keywords, and more.

Request examples

PDP with URL input

The input for a PDP scraper is always a URL pointing to the page to be scraped.
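Because a PDP input is just a URL, building the body amounts to wrapping each URL in an object. This sketch assumes the key is `url`, as in the Airbnb example earlier on this page; `pdp_inputs` is a hypothetical helper, and its client-side scheme check is an illustrative extra, not a documented API requirement.

```python
from urllib.parse import urlparse


def pdp_inputs(urls: list) -> list:
    """Wrap each page URL in an input object of the form {"url": ...}."""
    inputs = []
    for url in urls:
        parsed = urlparse(url)
        # Reject anything that is not an absolute http(s) URL before sending.
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError(f"not an absolute http(s) URL: {url!r}")
        inputs.append({"url": url})
    return inputs


print(pdp_inputs(["https://www.airbnb.com/rooms/50122531"]))
# [{'url': 'https://www.airbnb.com/rooms/50122531'}]
```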

Discovery input based on the discovery method

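Discovery inputs vary according to the specific scraper and the discovery method it uses. Depending on the method, inputs can be, for example, keywords, best-seller URLs, category URLs, or locations.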
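For discovery scrapers, the inputs depend on the discovery method, which is selected through the query parameters described under Query Parameters. Below is a sketch for keyword discovery in which the query-parameter names `dataset_id`, `type`, and `discover_by` and the input field `keyword` are assumptions for illustration; check the specific scraper's reference for the real names. The dataset ID is the example value from the parameter reference and may not actually support keyword discovery.

```python
from urllib.parse import urlencode


def keyword_discovery_request(dataset_id: str, keywords: list) -> tuple:
    """Return (query_string, inputs) for a keyword-based discovery collection."""
    query = urlencode({
        "dataset_id": dataset_id,   # assumed parameter name
        "type": "discover_new",     # collection includes a discovery phase
        "discover_by": "keyword",   # discovery method (assumed parameter name)
    })
    inputs = [{"keyword": kw} for kw in keywords]  # assumed input field name
    return query, inputs


query, inputs = keyword_discovery_request("gd_l1vikfnt1wgvvqz95w", ["wireless headphones"])
print(query)   # dataset_id=gd_l1vikfnt1wgvvqz95w&type=discover_new&discover_by=keyword
print(inputs)  # [{'keyword': 'wireless headphones'}]
```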

Authorizations

Bearer authentication header of the form Bearer <token>, where <token> is your auth token.
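A minimal sketch of the header described above. It reads the token from an environment variable so it is not hard-coded; the variable name `SCRAPER_API_TOKEN` and the helper `auth_headers` are hypothetical.

```python
import os
from typing import Optional


def auth_headers(token: Optional[str] = None) -> dict:
    """Build the Bearer authentication header (hypothetical helper)."""
    if token is None:
        # SCRAPER_API_TOKEN is a hypothetical environment variable name.
        token = os.environ.get("SCRAPER_API_TOKEN")
    if not token:
        raise RuntimeError("no API token: pass one or set SCRAPER_API_TOKEN")
    return {"Authorization": f"Bearer {token}"}


print(auth_headers("abc123"))  # {'Authorization': 'Bearer abc123'}
```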

Query Parameters

Dataset ID for which data collection is triggered.

"gd_l1vikfnt1wgvvqz95w"

List of output columns, separated by | (e.g., url|about.updated_on). Filters the response to include only the specified fields.

"url|about.updated_on"

Set it to "discover_new" to trigger a collection that includes a discovery phase.

"discover_new"

Specifies which discovery method to use. Available options: "keyword", "best_sellers_url", "category_url", "location" and more (according to the specific API). Relevant only for collections that include a discovery phase.

Include an errors report with the results.

Limit the number of results per input. Relevant only for collections that include a discovery phase.

Required range: x >= 1

Limit the total number of results.

Required range: x >= 1

URL where the notification will be sent once the collection is finished. The notification will contain snapshot_id and status.

Webhook URL where data will be delivered.

Specifies the format of the data to be delivered to the webhook endpoint.

Available options: json, ndjson, jsonl, csv

Authorization header to be used when sending the notification to the notify URL or delivering data via the webhook endpoint.

By default, the data will be sent to the webhook compressed. Pass true to send it uncompressed.
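The parameter descriptions above do not spell out the query-parameter names, so the sketch below uses names assumed for illustration (`dataset_id`, `custom_output_fields`, `include_errors`, `limit_per_input`, `limit_multiple_results`, `notify`, `endpoint`, `format`, `uncompressed_webhook`); confirm them against the API reference before relying on them. What it does show faithfully is the documented behavior: output columns joined with `|`, both limits constrained to x >= 1, and the webhook format restricted to json, ndjson, jsonl, or csv. The webhook authorization header is omitted to keep the example short.

```python
from typing import Optional
from urllib.parse import urlencode

# Formats listed for webhook delivery in the reference above.
ALLOWED_FORMATS = {"json", "ndjson", "jsonl", "csv"}


def trigger_query(
    dataset_id: str,
    *,
    output_fields: Optional[list] = None,
    include_errors: bool = False,
    limit_per_input: Optional[int] = None,
    limit_total: Optional[int] = None,
    notify_url: Optional[str] = None,
    webhook_url: Optional[str] = None,
    data_format: Optional[str] = None,
    uncompressed_webhook: bool = False,
) -> str:
    """Build a trigger query string. All parameter NAMES here are assumptions."""
    params = {"dataset_id": dataset_id}
    if output_fields:
        # Output columns are joined with "|", e.g. url|about.updated_on.
        params["custom_output_fields"] = "|".join(output_fields)
    if include_errors:
        params["include_errors"] = "true"
    # Both limits must satisfy x >= 1.
    for name, value in (("limit_per_input", limit_per_input),
                        ("limit_multiple_results", limit_total)):
        if value is not None:
            if value < 1:
                raise ValueError(f"{name} must be >= 1, got {value}")
            params[name] = str(value)
    if notify_url:
        params["notify"] = notify_url        # receives snapshot_id and status
    if webhook_url:
        params["endpoint"] = webhook_url     # receives the data itself
    if data_format is not None:
        if data_format not in ALLOWED_FORMATS:
            raise ValueError(f"unsupported format: {data_format!r}")
        params["format"] = data_format
    if uncompressed_webhook:
        params["uncompressed_webhook"] = "true"
    # urlencode percent-encodes "|" (as %7C) and any URLs passed in.
    return urlencode(params)


print(trigger_query("gd_l1vikfnt1wgvvqz95w",
                    output_fields=["url", "about.updated_on"],
                    include_errors=True))
```

Validating the limits and format on the client side is a design choice: it surfaces mistakes before a job is queued, at the cost of duplicating rules the server presumably also enforces.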

Body

The body is of type object[]: an array containing only the input objects.

Response

Collection job successfully started

The response is of type object.
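The response object carries the snapshot ID mentioned under How It Works. A minimal sketch of the asynchronous pattern follows, assuming the ID sits in a `snapshot_id` field (the name the notification uses) and that you have some way to query a snapshot's status. `check_status` and the "ready"/"failed" status strings are hypothetical stand-ins, not documented API calls; injecting the status function and the sleep keeps the loop testable without network access.

```python
import json
import time
from typing import Callable


def snapshot_id_from_response(body: str) -> str:
    """Extract the snapshot ID from a trigger response body (field name assumed)."""
    return json.loads(body)["snapshot_id"]


def wait_until_ready(
    snapshot_id: str,
    check_status: Callable[[str], str],
    interval: float = 10.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll check_status(snapshot_id) until it reports "ready".

    check_status is a hypothetical, caller-supplied function; the status
    strings "ready" and "failed" are assumptions for illustration.
    """
    waited = 0.0
    while True:
        status = check_status(snapshot_id)
        if status == "ready":
            return status
        if status == "failed":
            raise RuntimeError(f"snapshot {snapshot_id} failed")
        if waited >= timeout:
            raise TimeoutError(f"snapshot {snapshot_id} not ready after {waited}s")
        sleep(interval)
        waited += interval


# Usage with a stubbed status source instead of a real API call.
fake_statuses = iter(["running", "running", "ready"])
sid = snapshot_id_from_response('{"snapshot_id": "s_example123"}')
print(sid, wait_until_ready(sid, lambda _id: next(fake_statuses), sleep=lambda _s: None))
```

If you set a notification URL when triggering, polling is unnecessary: the notification carries snapshot_id and status once the collection is finished.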